
Manga OCR

Optical character recognition for Japanese text, with the main focus being Japanese manga. It uses a custom end-to-end model built with Transformers' Vision Encoder Decoder framework.

Manga OCR can be used as a general-purpose OCR for printed Japanese, but its main goal is to provide high-quality text recognition that is robust against scenarios specific to manga:

- both vertical and horizontal text

- text with furigana

- text overlaid on images

- wide variety of fonts and font styles

- low quality images

Unlike many OCR models, Manga OCR supports recognizing multi-line text in a single forward pass, so that text bubbles found in manga can be processed at once, without splitting them into lines.

Installation

You need Python 3.6+.

If you want to run on a GPU, first install PyTorch following its official installation instructions; otherwise this step can be skipped.

Run:

pip install manga-ocr

Usage

Running in the background

Manga OCR can run in the background, processing new images as they appear.

You might then use a tool like ShareX to manually capture a region of the screen and let the OCR read it either from the system clipboard or from a specified directory.

For example:

- To read images from clipboard and write recognized texts to clipboard, run:

manga_ocr

- To read images from ShareX's screenshot folder, run:

manga_ocr "/path/to/sharex/screenshot/folder"

- To see other options, run:

manga_ocr --help

If manga_ocr doesn't work, you might also try replacing it with python -m manga_ocr.
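To illustrate the idea of processing new images as they appear in a directory, here is a minimal polling sketch. It is not how the `manga_ocr` command is implemented; the function names `find_new_images` and `watch_folder`, the suffix list, and the polling interval are all assumptions made for this example, and `ocr` stands for any callable that takes an image path and returns text.

```python
import time
from pathlib import Path

# Assumed set of image formats; adjust to whatever your capture tool writes.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".webp"}


def find_new_images(folder, seen):
    """Return image files in `folder` that are not in `seen`, oldest first.

    The returned paths are added to `seen` so they are reported only once.
    """
    candidates = [
        p for p in Path(folder).iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES and p not in seen
    ]
    candidates.sort(key=lambda p: (p.stat().st_mtime, p.name))
    seen.update(candidates)
    return candidates


def watch_folder(folder, ocr, poll_seconds=0.5, max_polls=None):
    """Return a generator of (path, text) for images added after this call.

    `ocr` is any callable mapping an image path (str) to recognized text.
    `max_polls=None` means poll forever.
    """
    folder = Path(folder)
    # Snapshot existing files right away so only later arrivals are processed.
    seen = set(folder.iterdir())

    def _poll():
        polls = 0
        while max_polls is None or polls < max_polls:
            for path in find_new_images(folder, seen):
                yield path, ocr(str(path))
            polls += 1
            time.sleep(poll_seconds)

    return _poll()
```

A screenshot tool may still be writing a file when the poll sees it, so a real watcher would probably need to retry or wait briefly before reading a newly detected image.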

Python API

from manga_ocr import MangaOcr

mocr = MangaOcr()

text = mocr('/path/to/img')

or

import PIL.Image

from manga_ocr import MangaOcr

mocr = MangaOcr()

img = PIL.Image.open('/path/to/img')

text = mocr(img)
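Since the `MangaOcr` instance is called like a function, it can be passed around as a plain callable. The sketch below runs one such callable over every image in a folder; the helper name `ocr_folder` and the default suffix list are assumptions made for this example and are not part of the library.

```python
from pathlib import Path


def ocr_folder(folder, ocr, suffixes=(".png", ".jpg", ".jpeg")):
    """Run `ocr` on each image in `folder` and return {file name: text}.

    `ocr` is any callable that accepts an image path and returns a string,
    for example a single MangaOcr instance reused for all files.
    Files are processed in sorted name order; other file types are skipped.
    """
    results = {}
    for path in sorted(Path(folder).iterdir()):
        if path.is_file() and path.suffix.lower() in suffixes:
            results[path.name] = ocr(str(path))
    return results


# With the real model this would be called roughly as:
#   from manga_ocr import MangaOcr
#   texts = ocr_folder('/path/to/images', MangaOcr())
```

Creating the `MangaOcr` object once and reusing it for every file avoids repeating whatever setup the constructor performs.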

Usage tips

- OCR supports multi-line text, but the longer the text, the more likely errors are to occur. If recognition fails for part of a longer text, try running it on a smaller portion of the image.

- The model was trained specifically to handle manga well, but should do a decent job on other types of printed text, such as novels or video games. It probably won't be able to handle handwritten text though.

- The model always attempts to recognize some text in the image, even if there is none. Because it uses a transformer decoder (and therefore has some understanding of the Japanese language), it might even "dream up" some realistic-looking sentences! This shouldn't be a problem for most use cases, but it might be improved in the next version.
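One way to act on the first tip is to cut a long text region into smaller pieces and recognize each piece separately. The sketch below only computes crop boxes in the `(left, upper, right, lower)` form that Pillow's `Image.crop` accepts; the helper name `split_into_strips` and the equal-width split are assumptions made for this example. Equal strips can cut through characters, so in practice the cut positions would likely need to be chosen in the gaps between columns or lines.

```python
def split_into_strips(width, height, parts, vertical_text=True):
    """Return `parts` crop boxes covering a width x height image.

    Boxes are (left, upper, right, lower) tuples in reading order:
    for vertical Japanese text, columns from right to left;
    for horizontal text, rows from top to bottom.
    """
    if parts < 1:
        raise ValueError("parts must be at least 1")
    boxes = []
    if vertical_text:
        # Integer edges of each column, left to right.
        edges = [i * width // parts for i in range(parts + 1)]
        # Vertical text is read starting from the rightmost column.
        for i in reversed(range(parts)):
            boxes.append((edges[i], 0, edges[i + 1], height))
    else:
        # Integer edges of each row, top to bottom.
        edges = [i * height // parts for i in range(parts + 1)]
        for i in range(parts):
            boxes.append((0, edges[i], width, edges[i + 1]))
    return boxes


# Possible use with a PIL image `img` and a MangaOcr instance `mocr`:
#   pieces = [mocr(img.crop(box)) for box in split_into_strips(*img.size, parts=2)]
#   text = "".join(pieces)
```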

Examples

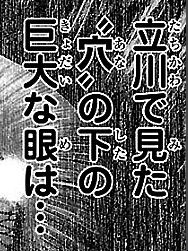

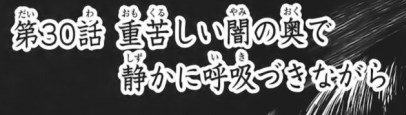

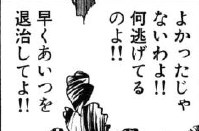

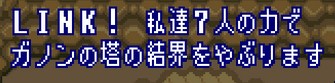

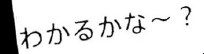

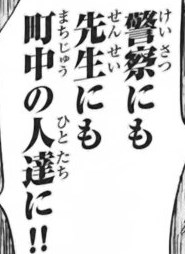

Here are some cherry-picked examples showing the capability of the model.

| image | Manga OCR result |
|---|---|
| | 素直にあやまるしか |
| | 立川で見た〝穴〟の下の巨大な眼は: |
| | 実戦剣術も一流です |
| | 第30話重苦しい闇の奥で静かに呼吸づきながら |
| | よかったじゃないわよ!何逃げてるのよ!!早くあいつを退治してよ! |
| | ぎゃっ |
| | ピンポーーン |
| | LINK!私達7人の力でガノンの塔の結界をやぶります |
| | ファイアパンチ |
| | 少し黙っている |
| | わかるかな〜? |
| | 警察にも先生にも町中の人達に!! |

Acknowledgments

This project was made using the Manga109-s dataset.